Deep learning for deforestation detection. An insight into the state of the art.

By Manuel Hecht on 24.02.2023

The 2018 ‘State of the World’s Forests’ report stresses the importance of forests as terrestrial ecosystems: they are home to more than 75% of the world’s terrestrial biodiversity and absorb the equivalent of billions of tons of CO2 per year. “Forests and trees make vital contributions both to people and the planet, bolstering livelihoods, providing clean air and water, conserving biodiversity and responding to climate change” (p. 10; FAO 2018).

Background

Deforestation

Deforestation is a major source of greenhouse gas emissions worldwide and, in some regions, even outranks the burning of fossil fuels, which makes it one of the primary concerns regarding climate change. Studies have shown that, together with forest fires, deforestation in the Brazilian Amazon accounts for 48% of total emissions. It also directly affects the conservation of biodiversity and of the associated ecosystems in that region. Further consequences of deforestation include the loss of species, reduced seasonal rainfall and changes to the organization of the environment (Ortega et al. 2019).

Remote Sensing

Remote sensing imagery has been essential for keeping track of deforestation.

NASA defines remote sensing as “the acquiring of information from a distance via remote sensors on satellites and aircraft that detect, and record reflected or emitted energy”, which further “enables data-informed decision making based on the current state of our planet” (NASA 2019). It offers a cost-effective way to obtain a broad range of data, especially for areas that are hard to access. Additionally, remote sensing has proven well suited to representing the condition of forests by generating spatial maps that help outline the severity of damage (Xulu et al. 2019).

Change Detection

Change detection is a common task in remote sensing. It is the process of analyzing the state of an object at different times, which makes it well suited for understanding and tackling deforestation. A major challenge in change detection is minimizing noise (e.g., variations in shadows or lighting) in order to obtain a clear map of changed and unchanged areas. Many change detection methods based on machine learning have been proposed so far. They usually treat the task as a classification problem, take a stack of multi-temporal images as input and use complex algorithms to determine changes. Deep learning has received growing attention for remote sensing data because it can automatically extract features from the image dataset, perform high-level semantic segmentation, model nonlinear problems and map complex environments. For change detection, deep learning methods have shown better results than classic machine learning methods. Combining all three dimensions of the imagery (temporal, spectral and spatial) with the pattern recognition power of deep learning has proven especially effective (de Bem et al. 2020).
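To make the idea of a map of changed and unchanged areas concrete, here is a minimal sketch of the simplest classical approach: pixel-wise differencing of two co-registered images followed by a fixed threshold. It is not one of the deep learning methods discussed in this article; the function name, the threshold and the toy vegetation-index values are assumptions for illustration.

```python
from typing import List

Image = List[List[float]]  # one band, H x W


def change_map(before: Image, after: Image, threshold: float) -> List[List[int]]:
    """Return 1 where the absolute difference exceeds `threshold`, else 0."""
    if len(before) != len(after) or any(
        len(rb) != len(ra) for rb, ra in zip(before, after)
    ):
        raise ValueError("images must be co-registered and of equal size")
    return [
        [1 if abs(a - b) > threshold else 0 for b, a in zip(row_b, row_a)]
        for row_b, row_a in zip(before, after)
    ]


# Toy vegetation-index values for two dates (made up for illustration).
before = [[0.80, 0.80, 0.70],
          [0.80, 0.75, 0.80]]
after = [[0.80, 0.20, 0.70],
         [0.78, 0.10, 0.80]]

# The small variation at row 1, column 0 (e.g. lighting) stays below the threshold.
print(change_map(before, after, threshold=0.3))  # [[0, 1, 0], [0, 1, 0]]
```

A fixed threshold is fragile when shadows or lighting changes exceed it, which is part of why learned methods are attractive for this task.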

Deep learning algorithms for image-based change detection learn segmentation directly from bi-temporal image pairs, thus avoiding the negative effects associated with pixel patches. The convolutional neural network (CNN) is a leading architecture among deep learning (DL) algorithms. Through convolutional filters, CNNs can identify patterns within an n-dimensional context at multiple levels of abstraction and use them for inference, taking the place of traditional ML algorithms (de Bem et al. 2020).
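What a single convolutional filter does can be shown without any framework. The sketch below slides a small filter over an image patch (stride 1, no padding) and, like most deep learning libraries, computes a cross-correlation, i.e. the filter is not flipped. In a real CNN the filter values are learned; here they are hand-set, and the function name and toy patch are assumptions for illustration.

```python
from typing import List

Grid = List[List[float]]


def conv2d_valid(image: Grid, kernel: Grid) -> Grid:
    """Slide `kernel` over `image` (stride 1, no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


# Hand-set filter that responds to a left-to-right drop in brightness,
# e.g. the boundary between forest (high values) and a clearing (low values).
edge_filter = [[1.0, -1.0]]
patch = [
    [0.9, 0.9, 0.1, 0.1],
    [0.9, 0.9, 0.1, 0.1],
]
response = conv2d_valid(patch, edge_filter)
print(response)  # [[0.0, 0.8, 0.0], [0.0, 0.8, 0.0]]
```

The output is a feature map that is high only where the pattern encoded by the filter occurs; stacking many such filters in successive layers gives the multiple abstraction levels mentioned above.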

Change Detection with Deep Learning

This section presents several deep learning methods for change detection that have recently been used in remote sensing and spatial information studies.

Convolutional Neural Network (CNN)

The Convolutional Neural Network (CNN) is the most commonly used deep learning method in computer vision. A CNN passes the pixel values of the input image through convolutional layers to create feature maps, which are progressively downsized by pooling. At the end of this process, a fully connected layer is attached whose output can be fed to a classifier (Lee et al. 2020).
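The remaining steps of this pipeline (activation, pooling, fully connected layer and classification) can be sketched in plain Python. This is a minimal illustration, not a trainable network: the 4x4 feature map stands in for the output of a convolutional layer, the weights are hypothetical, and a softmax over two classes is assumed as the classifier.

```python
import math
from typing import List

Grid = List[List[float]]


def relu(fmap: Grid) -> Grid:
    """Element-wise ReLU: negative activations become 0."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool_2x2(fmap: Grid) -> Grid:
    """2x2 max pooling with stride 2 (assumes even height and width)."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]), 2)]
        for i in range(0, len(fmap), 2)
    ]


def dense(x: List[float], weights: Grid, bias: List[float]) -> List[float]:
    """Fully connected layer: one output per row of `weights`."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(logits: List[float]) -> List[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy 4x4 feature map, as if produced by a convolutional layer.
feature_map = [
    [0.2, -0.5, 0.8, 0.1],
    [-0.3, 0.4, 0.0, -0.2],
    [0.6, 0.1, -0.9, 0.3],
    [0.0, -0.1, 0.5, 0.7],
]
pooled = max_pool_2x2(relu(feature_map))   # 4x4 -> 2x2
flat = [v for row in pooled for v in row]  # flatten for the dense layer
# Hypothetical weights for two classes: "no deforestation", "deforestation".
weights = [[0.5, -0.5, 0.2, 0.1],
           [-0.2, 0.6, 0.3, 0.4]]
probs = softmax(dense(flat, weights, bias=[0.0, 0.0]))
print(pooled)  # [[0.4, 0.8], [0.6, 0.7]]
```

Flattening before the dense layer is the step that discards where in the image a feature occurred, the limitation that fully convolutional networks address.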

Early Fusion (EF)

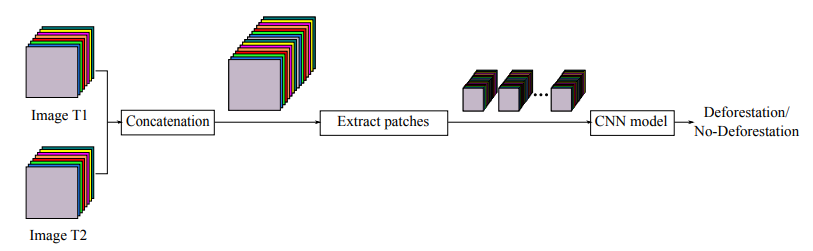

The Early Fusion approach is inspired by the CNN model of Daudt et al. (2018), which demonstrated good results for change detection in urban areas. As its first step, EF concatenates the two images of a pair, acquired on different dates, into a single input. This input is then processed by a series of seven convolutional layers and two fully connected layers, and a softmax layer with two outputs performs the final classification, as shown in Figure 1. The dataset consists of pairs of Landsat 8 OLI images from 2016 and 2017, provided by PRODES (Shimabukuro et al. 2006), covering areas of the Brazilian Amazon where significant deforestation has been tracked. The EF method achieved an overall accuracy of 97% (Ortega et al. 2019).
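The fusion step itself is simple: the two dates are stacked along the channel axis before any convolution, so the first layer already sees both dates at once. The sketch below assumes co-registered images with identical bands and represents an image as a list of H x W bands; the function names and the 3-band toy patches are illustrative and not the configuration used by Ortega et al. (2019).

```python
from typing import List, Tuple

Band = List[List[float]]  # one spectral band, H x W
Image = List[Band]        # a list of C bands (channels first)


def band_shapes(img: Image) -> List[Tuple[int, int]]:
    """Height and width of every band."""
    return [(len(band), len(band[0])) for band in img]


def early_fusion(img_t1: Image, img_t2: Image) -> Image:
    """Stack two co-registered images along the channel axis (C + C -> 2C)."""
    if band_shapes(img_t1) != band_shapes(img_t2):
        raise ValueError("both dates need the same bands and image size")
    return img_t1 + img_t2  # new list: bands of date 1 followed by date 2


# Toy 3-band, 2x2 patches for two acquisition dates (values are made up).
patch_2016 = [[[0.1, 0.2], [0.3, 0.4]] for _ in range(3)]
patch_2017 = [[[0.5, 0.6], [0.7, 0.8]] for _ in range(3)]
fused = early_fusion(patch_2016, patch_2017)
print(len(fused))  # 6
```

Everything after this step is an ordinary single-input CNN, which is what distinguishes early fusion from the Siamese design.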

Siamese Network (S-CNN)

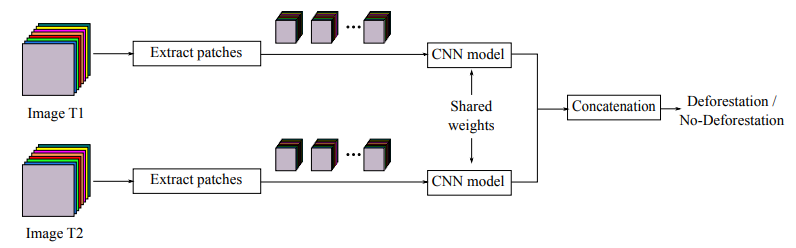

The Siamese Convolutional Neural Network is an adaptation of a traditional CNN that consists of two identical branches sharing the same hyperparameters and weight values (Zhang et al. 2018), as shown in Figure 2. Daudt et al. (2018) applied an S-CNN to change detection in urban areas. Each input patch is processed by four convolutional layers whose weights are shared between both branches, and the outputs of the two branches are concatenated and passed through fully connected layers. The resulting feature vector is the input for a classifier that decides whether deforestation occurred in that time span. Ortega et al. (2019) used the same dataset for the S-CNN as for the EF approach in order to compare both methods. Overall, the S-CNN consistently outperformed the Early Fusion method in terms of F1-score and accuracy.
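The defining property of the S-CNN, weight sharing between branches, can be shown in a few lines. In this minimal sketch each branch is reduced to a single linear layer with ReLU instead of four convolutional layers, and a logistic output stands in for the classifier. All names and weight values are hypothetical; in a real S-CNN the weights are learned.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def branch(x: Vector, weights: Matrix) -> Vector:
    """One branch: a linear layer plus ReLU, standing in for the conv layers."""
    return [max(0.0, sum(w * v for w, v in zip(row, x))) for row in weights]


def siamese_change_prob(patch_t1: Vector, patch_t2: Vector, shared: Matrix,
                        head: Vector, bias: float) -> float:
    """Probability of change for a pair of flattened patches."""
    f1 = branch(patch_t1, shared)  # both branches use the SAME weight matrix
    f2 = branch(patch_t2, shared)
    fused = f1 + f2                # concatenate the two feature vectors
    logit = sum(w * v for w, v in zip(head, fused)) + bias
    return 1.0 / (1.0 + math.exp(-logit))  # logistic output in (0, 1)


# Hypothetical weights chosen by hand for illustration.
shared = [[0.5, 0.5], [1.0, -1.0]]
head = [1.0, 1.0, -1.0, -1.0]
forest_2016 = [0.9, 0.8]
cleared_2017 = [0.2, 0.1]

p_change = siamese_change_prob(forest_2016, cleared_2017, shared, head, bias=-0.5)
p_same = siamese_change_prob(forest_2016, forest_2016, shared, head, bias=-0.5)
print(p_change > p_same)  # True
```

Because both dates pass through identical weights, the same land cover produces the same features regardless of date, so the classifier only has to learn what a difference between the two feature vectors means.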

Fully Convolutional Network (FCN)

The Fully Convolutional Network (FCN) overcomes limitations of the CNN, such as the loss of image location information, by replacing the fully connected (dense) layers with convolutional layers (Shelhamer et al. 2016). The following sections compare two FCN-based architectures, SegNet (Badrinarayanan et al. 2016) and U-Net (Ronneberger et al. 2015), as evaluated in a study classifying deforestation in Korean forests (Lee et al. 2020).
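Replacing the dense layers with convolutions is what lets an FCN output a class map instead of a single label. A dense layer applied independently at every pixel is equivalent to a 1x1 convolution, as this framework-free sketch illustrates. The channels-last layout, the function names and the toy weights are assumptions for illustration.

```python
from typing import List

Pixels = List[List[List[float]]]  # H x W x C (channels last)


def conv1x1(fmap: Pixels, weights: List[List[float]], bias: List[float]) -> Pixels:
    """Apply the same dense layer at every pixel (a 1x1 convolution).

    The H x W grid is kept; each pixel's C features become one score per class.
    """
    return [
        [[sum(w * v for w, v in zip(row, pixel)) + b for row, b in zip(weights, bias)]
         for pixel in line]
        for line in fmap
    ]


def argmax(scores: List[float]) -> int:
    """Index of the highest score."""
    return max(range(len(scores)), key=scores.__getitem__)


# Toy 2x2 feature map with 3 channels per pixel.
fmap = [
    [[0.9, 0.1, 0.0], [0.2, 0.8, 0.1]],
    [[0.7, 0.3, 0.2], [0.1, 0.9, 0.4]],
]
weights = [[1.0, -1.0, 0.0],   # hypothetical scores for "forest"
           [-1.0, 1.0, 0.5]]   # hypothetical scores for "non-forest"
scores = conv1x1(fmap, weights, bias=[0.0, 0.0])
class_map = [[argmax(s) for s in line] for line in scores]
print(class_map)  # [[0, 1], [0, 1]]  (0 = forest, 1 = non-forest)
```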

U-Net

U-Net was developed based on the FCN architecture and is applied mainly to the segmentation of medical images when only a small number of training images is available. It consists of an encoder (contracting) path and a decoder (expansive) path. The encoder path takes an image patch with multiple channels as input and downsamples it using convolutional layers, ReLU activation functions and max pooling. The expansive path has two distinctive characteristics: the copy-and-crop step, which passes feature maps from the contracting path to the expansive path through skip connections, and the use of convolutional layers without fully connected layers in the image restoration stage. The network predicts the values at the patch border by mirroring the input image. By processing patches instead of using a sliding window, U-Net is faster than previous networks, and its copy-and-crop concatenation solves the localization problem of the FCN. In the Korean study, U-Net classified forests and non-forests with 98.4% and 88.5% accuracy, respectively.
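The copy-and-crop step described above can be sketched as follows. Because the original U-Net uses unpadded convolutions, encoder feature maps are larger than the matching decoder maps and must be cropped before the two are concatenated. This minimal sketch assumes a channels-first list layout; the function names are hypothetical.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # C x H x W (channels first)


def center_crop(fmap: FeatureMap, out_h: int, out_w: int) -> FeatureMap:
    """Crop every channel of `fmap` to out_h x out_w around its center."""
    h, w = len(fmap[0]), len(fmap[0][0])
    top, left = (h - out_h) // 2, (w - out_w) // 2
    return [[row[left:left + out_w] for row in channel[top:top + out_h]]
            for channel in fmap]


def copy_and_crop(encoder_fmap: FeatureMap, decoder_fmap: FeatureMap) -> FeatureMap:
    """Skip connection: crop the encoder map to the decoder's size, then
    concatenate both along the channel axis."""
    out_h, out_w = len(decoder_fmap[0]), len(decoder_fmap[0][0])
    return center_crop(encoder_fmap, out_h, out_w) + decoder_fmap


# One 4x4 encoder channel (values 0..15) and two 2x2 upsampled decoder channels.
encoder = [[[float(4 * i + j) for j in range(4)] for i in range(4)]]
decoder = [[[0.0, 0.0], [0.0, 0.0]], [[1.0, 1.0], [1.0, 1.0]]]
merged = copy_and_crop(encoder, decoder)
print(len(merged), merged[0])  # 3 [[5.0, 6.0], [9.0, 10.0]]
```

The concatenated map carries both the fine spatial detail from the encoder and the coarse semantic information from the decoder into the next convolution.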

The overall accuracy of the U-Net model was 74.8%, which was 11.5 percentage points higher than that of SegNet (63.3%) (Lee et al. 2020).
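Overall and per-class accuracies like these are typically derived from a confusion matrix. The following is a minimal sketch that assumes per-class accuracy is measured as recall (the exact definition used by Lee et al. (2020) is not stated here) and uses a made-up confusion matrix for three hypothetical classes, not the study's data.

```python
from typing import Dict, List

ConfusionMatrix = List[List[int]]  # rows: reference class, columns: predicted class


def overall_accuracy(cm: ConfusionMatrix) -> float:
    """Correctly classified samples (the diagonal) divided by all samples."""
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


def per_class_accuracy(cm: ConfusionMatrix, labels: List[str]) -> Dict[str, float]:
    """Per-class accuracy as recall: correct / reference samples of that class."""
    return {label: cm[i][i] / sum(cm[i]) for i, label in enumerate(labels)}


# Made-up confusion matrix for three hypothetical classes.
labels = ["class A", "class B", "class C"]
cm = [[50, 5, 5],
      [10, 20, 10],
      [0, 10, 30]]
print(round(overall_accuracy(cm), 3))             # 0.714
print(per_class_accuracy(cm, labels)["class B"])  # 0.5
```

When two accuracies given in percent are compared, their difference is a difference in percentage points: 74.8% minus 63.3% gives 11.5 percentage points.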

SegNet

Compared to other learning methods, SegNet is efficient in terms of both training speed and accuracy. Its architecture includes both an encoder and a decoder. In the encoder, images are downsampled and features are extracted using convolutional layers with rectified linear units (ReLUs). The decoder then restores the image to its original resolution. Because the decoder upsamples using the max-pooling indices stored by the corresponding encoder layers, spatial information is preserved during decoding. This feature differentiates SegNet from other FCNs. Once the image has been reconstructed, it is classified pixel by pixel using a softmax function. The results showed that SegNet outperformed U-Net in classifying hardwood forest and bare land, but U-Net achieved higher accuracy than SegNet for all other classes (Lee et al. 2020).
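The index-based upsampling that distinguishes SegNet can be sketched without a framework: the encoder's max pooling records the position of each maximum, and the decoder writes values back to exactly those positions. This is a minimal single-channel sketch with hypothetical function names; in SegNet the resulting sparse map is then densified by trainable convolutions.

```python
from typing import List, Tuple

Grid = List[List[float]]
Indices = List[List[Tuple[int, int]]]


def max_pool_with_indices(fmap: Grid) -> Tuple[Grid, Indices]:
    """2x2 max pooling (stride 2) that also records where each maximum was."""
    pooled, indices = [], []
    for i in range(0, len(fmap), 2):
        pooled_row, index_row = [], []
        for j in range(0, len(fmap[0]), 2):
            window = [(fmap[r][c], (r, c)) for r in (i, i + 1) for c in (j, j + 1)]
            value, pos = max(window, key=lambda item: item[0])
            pooled_row.append(value)
            index_row.append(pos)
        pooled.append(pooled_row)
        indices.append(index_row)
    return pooled, indices


def max_unpool(pooled: Grid, indices: Indices, height: int, width: int) -> Grid:
    """Write each pooled value back to its recorded position; fill the rest with 0."""
    out = [[0.0] * width for _ in range(height)]
    for pooled_row, index_row in zip(pooled, indices):
        for value, (r, c) in zip(pooled_row, index_row):
            out[r][c] = value
    return out


fmap = [
    [1.0, 3.0, 0.0, 2.0],
    [2.0, 0.0, 5.0, 1.0],
    [0.0, 4.0, 1.0, 0.0],
    [1.0, 0.0, 0.0, 6.0],
]
pooled, indices = max_pool_with_indices(fmap)  # encoder side
unpooled = max_unpool(pooled, indices, 4, 4)   # decoder side
# pooled   == [[3.0, 5.0], [4.0, 6.0]]
# unpooled == [[0.0, 3.0, 0.0, 0.0],
#              [0.0, 0.0, 5.0, 0.0],
#              [0.0, 4.0, 0.0, 0.0],
#              [0.0, 0.0, 0.0, 6.0]]
```

Only the indices need to be kept from the encoder, which is cheaper than storing and concatenating whole feature maps as U-Net's copy-and-crop does.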

Conclusion

Convolutional Neural Networks (CNN) and Fully Convolutional Networks (FCN) are both state-of-the-art deep learning techniques for detecting deforestation. CNNs such as the Early Fusion (EF) method and the Siamese Network (S-CNN) suffer from a loss of information, whereas the FCN methods U-Net and SegNet bypass this limitation with an encoder-decoder architecture that replaces the dense layers with convolutional filters. All methods delivered promising results in classifying areas affected by severe deforestation. As deep learning is still an emerging technology, especially in the remote sensing field, large deep learning libraries such as Keras or TensorFlow (https://www.tensorflow.org/, last visited on 11.11.2022) do not provide ready-made implementations of these methods. Nevertheless, combining multi-band satellite imagery with computer vision and deep learning methods will be a promising topic for future work.
